Technical description:

Development:

The project's technical core is the recoding and augmentation of a standard multiplayer first-person videogame for use in performance and theatre setups. This involves custom interface design, the combination of a 3D virtual space with live video feeds and sound, and staging the result in a site-specific set.

Explanation:

By augmenting the game space with live webcam feeds we can shift between levels of representation, from on-stage performer to in-game avatar, and play with encounters of the real (video) in the virtual. To deconstruct the phenomenology of viewing, we manipulate the audience's perception of the narrative with time delay, projections, and a virtual replica of the physical space, so that the set behaves as a high-tech, two-way mirror.
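The time delay mentioned above could be realised as a simple delay line on the video frames. The following is a minimal sketch under that assumption, not the project's actual implementation; the class name `FrameDelay` and its parameter are hypothetical, and a frame can be any object (for example a raw image buffer).

```python
from collections import deque


class FrameDelay:
    """Fixed-length delay line: push() returns the frame pushed `delay_frames` calls earlier."""

    def __init__(self, delay_frames):
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        self.delay_frames = delay_frames
        self._buffer = deque()

    def push(self, frame):
        """Store the newest frame and return the delayed one (None while the buffer fills)."""
        self._buffer.append(frame)
        if len(self._buffer) > self.delay_frames:
            return self._buffer.popleft()
        return None
```

With a feed running at 25 frames per second, for instance, `FrameDelay(50)` would show the audience what the camera saw two seconds earlier.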

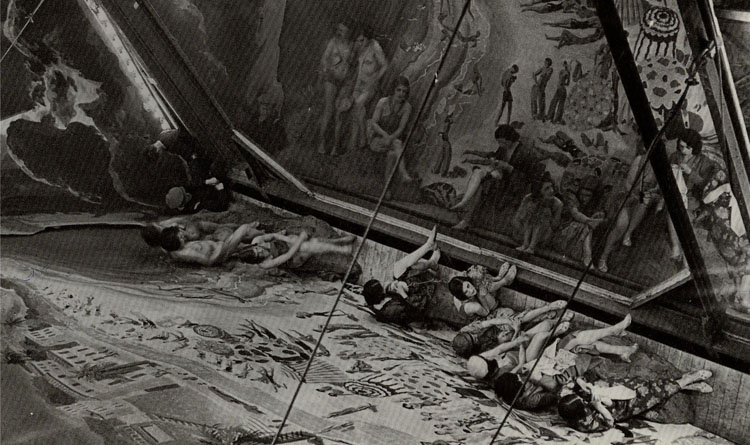

The backdrop, which suggests the virtual decor, becomes a performer itself: it is partly controlled by sensor-based interface objects on stage and open to intervention as the narrative unfolds. It is complemented by a soundscape made up of live sound and samples triggered by the performer and her surroundings.

The toolset combines a 3D game engine (ioQuake3 and possibly Ogre3D), video-streaming software (Icecast, DVGrab and FFmpeg), and PureData signal processing for the controllers/interface and part of the soundscape.
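One way the on-stage controllers could talk to PureData is over the network, using PureData's plain-text FUDI message format (atoms separated by spaces, each message closed with a semicolon). The sketch below assumes a PureData patch with a `netreceive` object listening for UDP; the host, the port `3000` and the message contents are placeholders, not part of the project's setup.

```python
import socket


def fudi_message(*atoms):
    """Encode atoms as one FUDI message: space-separated, ending in ';' and a newline."""
    return (" ".join(str(a) for a in atoms) + ";\n").encode("ascii")


def send_to_pd(atoms, host="127.0.0.1", port=3000):
    """Send one message over UDP; host and port are placeholders for this sketch."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(fudi_message(*atoms), (host, port))
```

A sensor event could then be forwarded with a call such as `send_to_pd(["sample", 3, 0.8])`, leaving it to the patch to decide which sample to trigger. Atoms that themselves contain spaces or semicolons would need escaping, which this sketch does not handle.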

We are currently looking to develop the webcam implementation in the game engine through a combination of Intel's OpenCV (Open Source Computer Vision) library and the ARToolkit (Augmented Reality Toolkit). Without getting too technical, OpenCV lets us read the raw image data from a webcam, which can then be blitted to a texture in the game level. We can then use the ARToolkit to engage with the physical objects on stage by making virtual representations (3D models) of them; this application overlays virtual imagery on the real world.
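The webcam-to-texture step can be sketched as follows. This is an illustration only: it assumes the engine wants RGBA texture data with power-of-two dimensions, it uses OpenCV's Python bindings rather than the C API an engine integration would call, and the function names are hypothetical. The engine-side upload of the resulting buffer is left out.

```python
def next_power_of_two(n):
    """Smallest power of two that is >= n (n must be positive)."""
    if n < 1:
        raise ValueError("n must be positive")
    return 1 << (n - 1).bit_length()


def bgr_to_rgba_texture(bgr, width, height, tex_w, tex_h):
    """Nearest-neighbour resample of packed BGR bytes into packed RGBA bytes."""
    if len(bgr) != width * height * 3:
        raise ValueError("buffer size does not match width * height * 3")
    out = bytearray(tex_w * tex_h * 4)
    for ty in range(tex_h):
        sy = ty * height // tex_h          # source row for this texture row
        for tx in range(tex_w):
            sx = tx * width // tex_w       # source column for this texture column
            src = (sy * width + sx) * 3
            dst = (ty * tex_w + tx) * 4
            out[dst] = bgr[src + 2]        # R
            out[dst + 1] = bgr[src + 1]    # G
            out[dst + 2] = bgr[src]        # B
            out[dst + 3] = 255             # opaque alpha
    return bytes(out)


def grab_webcam_texture(device_index=0):
    """Grab one frame with OpenCV and return (rgba_bytes, tex_w, tex_h)."""
    import cv2  # imported here so the helpers above work without OpenCV installed

    capture = cv2.VideoCapture(device_index)
    try:
        ok, frame = capture.read()  # frame is a height x width x 3 BGR array
    finally:
        capture.release()
    if not ok:
        raise RuntimeError("could not read a frame from the webcam")
    height, width = frame.shape[:2]
    tex_w, tex_h = next_power_of_two(width), next_power_of_two(height)
    rgba = bgr_to_rgba_texture(frame.tobytes(), width, height, tex_w, tex_h)
    return rgba, tex_w, tex_h
```

The pure-Python resampling loop is written for readability and would be too slow for live video; in practice `cv2.resize` and `cv2.cvtColor` with `cv2.COLOR_BGR2RGBA` would do the same work far faster.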

Here, the essence of our contemporary labyrinthine construction comes into being: the physical manifestation of a live performer, represented through a video stream in a virtual world, while interacting with physical objects and their virtual representations on yet another level.
